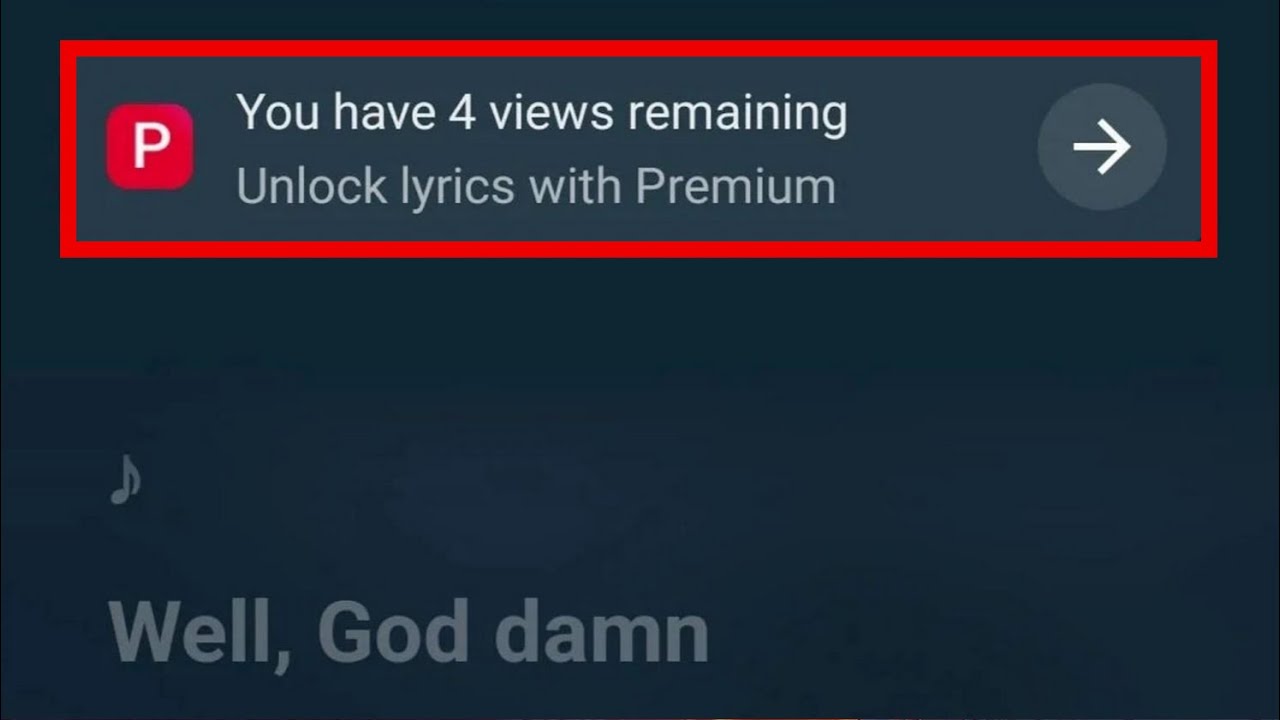

►YouTube Music lyrics are now paywalled behind a YouTube Premium subscription. You have to pay to see the full lyrics of any YouTube Music song, whereas this feature was previously free. YouTube has also paywalled background play and faster video speeds, among many other features. Let me know what you guys think about this situation in the comments below.

YouTube is falling apart.

YouTube has had a rough year – ads getting ridiculous, age verification, AI terminating channels, being community noted on Twitter, the list goes on. Today, we’re gonna look at it all. This video has been a long time coming.

Beyond The Internet

YouTube AI is Getting Worse…

►YouTube’s CEO Neal Mohan is fighting the AI slop/moderation problem by adding more AI. Neal said YouTube will be expanding their AI moderation tools, and that they are getting more sophisticated every day. Let me know what you guys think about this situation in the comments below.

YouTube is Protecting This Channel.

►MrSlanderist has stolen multiple YouTube videos from me, and now he’s stealing Internet Anarchist’s videos as well. This AI slop channel has stolen countless titles, thumbnails, and descriptions. Let me know what you guys think about this situation in the comments below.

YouTube Is Broken (and Small Channels are Paying the Price)

Views are down across YouTube. Is it restricted mode? Is it ad blockers? Is it AI? Is it a new algorithm made to compete with TikTok? Either way, the smallest channels will be hit the hardest by this change.

YouTube Keeps Lying to Us.

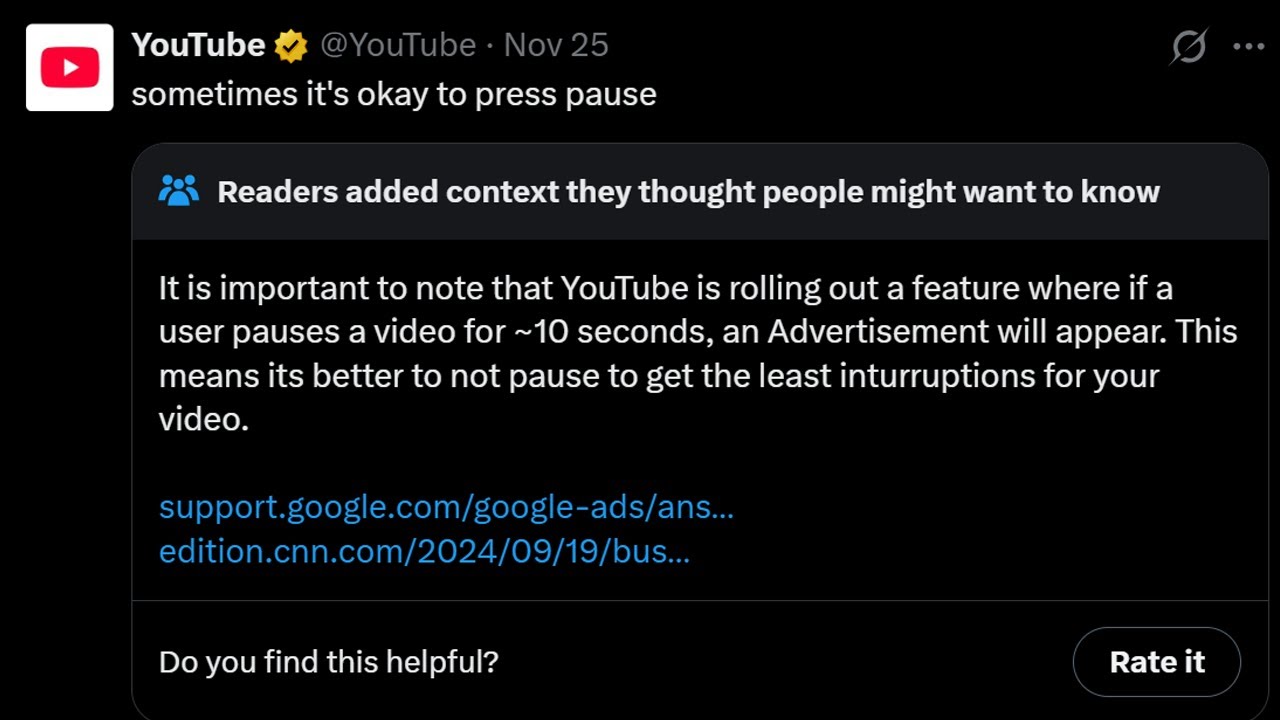

►YouTube is getting community noted on Twitter/X over the wave of false bans and terminations caused by its AI moderation. YouTube is constantly lying about the involvement of AI in their moderation, and the community has finally had enough. Let me know what you guys think about this situation in the comments below.

Deep Humor

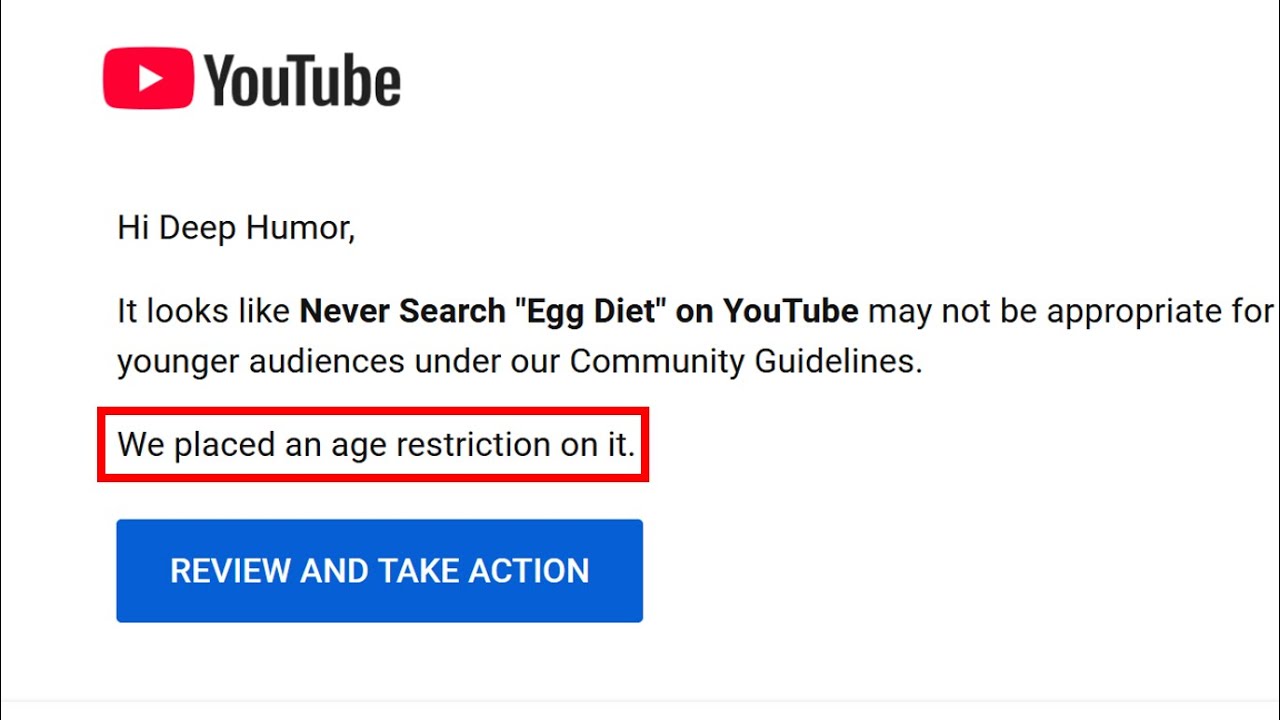

YouTube Wants Me Gone.

►YouTube age restricted a 7-month-old video about an egg search term. YouTube has constantly age restricted, copyright striked, claimed, demonetized, and community guidelines striked my videos throughout the years. Let me know what you guys think about this situation in the comments below.

Deep Humor

YouTube’s Competitor Asked Me to Join Them.

►YouTube’s competitor Rumble asked me to join them. Rumble’s content manager reached out and asked me to join their platform, likely because they’ve seen my videos criticizing YouTube.

Deep Humor


YouTube Posted This By Mistake…

►YouTube AI moderation has been a big topic, with TeamYouTube insisting to me in the DMs that they have never used and will never use automated AI bot responses. However, YouTube posted some tweets today, which they have since deleted, that show the exact template they use to send mass replies to YouTubers. Let me know what you guys think about this situation in the comments below.

YouTube Finally Comes Clean.

►Deep Humor: YouTube comes clean on AI moderation, false ban/termination waves, bot usage, and more. They claimed that there were no bugs in their system that resulted in the bans, and that the vast majority of the bans were accurate. They also claimed that they would be interested in doing an interview with me.